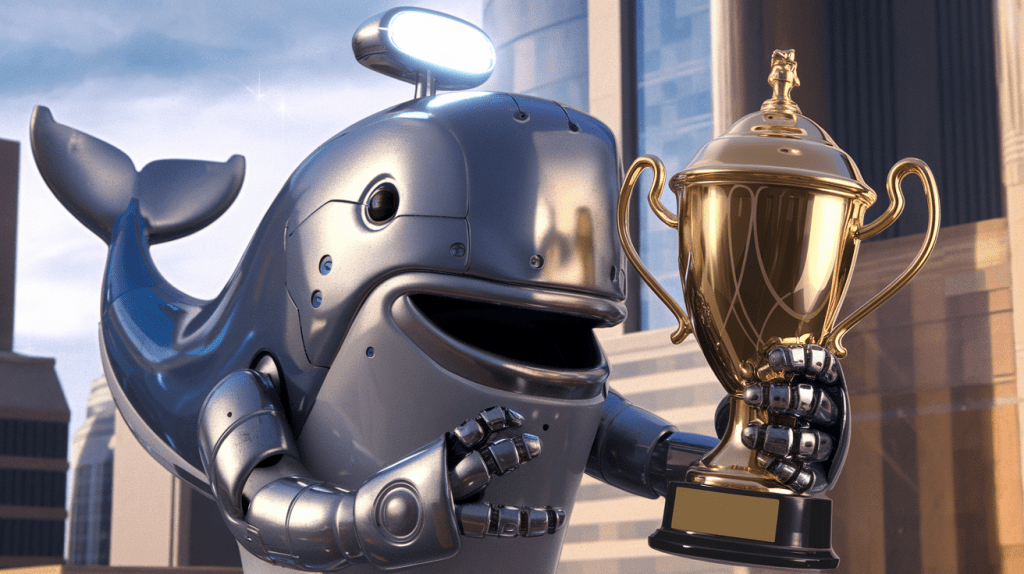

One of the new flagship AI models Meta released on Saturday, Maverick, ranks second on LM Arena, a test in which human raters compare the outputs of models and choose the one they prefer. However, the version of Maverick that Meta deployed to LM Arena appears to differ from the version that is widely available to developers.

As several AI researchers pointed out on X, Meta noted that the Maverick on LM Arena is an "experimental chat version." A chart on the official Llama website, meanwhile, discloses that Meta's LM Arena testing was conducted using "Llama 4 Maverick optimized for conversationality."

As we've written before, for various reasons LM Arena has never been the most reliable measure of an AI model's performance. But AI companies generally haven't customized or otherwise fine-tuned their models to score better on LM Arena, or at least haven't admitted to doing so.

Tailoring a model to a benchmark and then releasing a "vanilla" version of that same model makes it difficult for developers to predict how well the model will perform in particular contexts. It's also misleading. Ideally, benchmarks, inadequate as they are, provide a snapshot of a single model's strengths and weaknesses.

Indeed, researchers on X have observed differences in the behavior of the publicly downloadable Maverick compared with the model hosted on LM Arena. The LM Arena version uses a lot of emojis and gives incredibly long-winded answers.

Okay Llama 4, what is this yap city pic.twitter.com/y3gvhbvz65

– Nathan Lambert (@natolambert) April 6, 2025

For some reason, the Llama 4 model in Arena uses a lot more emojis

On together.ai, it seems better: pic.twitter.com/f74odx4ztt

– Tech Dev Notes (@techdevnotes) April 6, 2025

We've reached out to Meta and to Chatbot Arena, the organization that maintains LM Arena, for comment.